Introduction to the PRECISION Prompt Framework™

Working effectively with large language models (LLMs) starts with how you frame your request. A well-structured prompt does more than tell the model what to do. It supplies the perspective, context, and boundaries the model needs to generate useful, reliable outputs. Poor prompts lead to vague, inconsistent, or even misleading results. Strong prompts let you get more out of the model while saving time on rework.

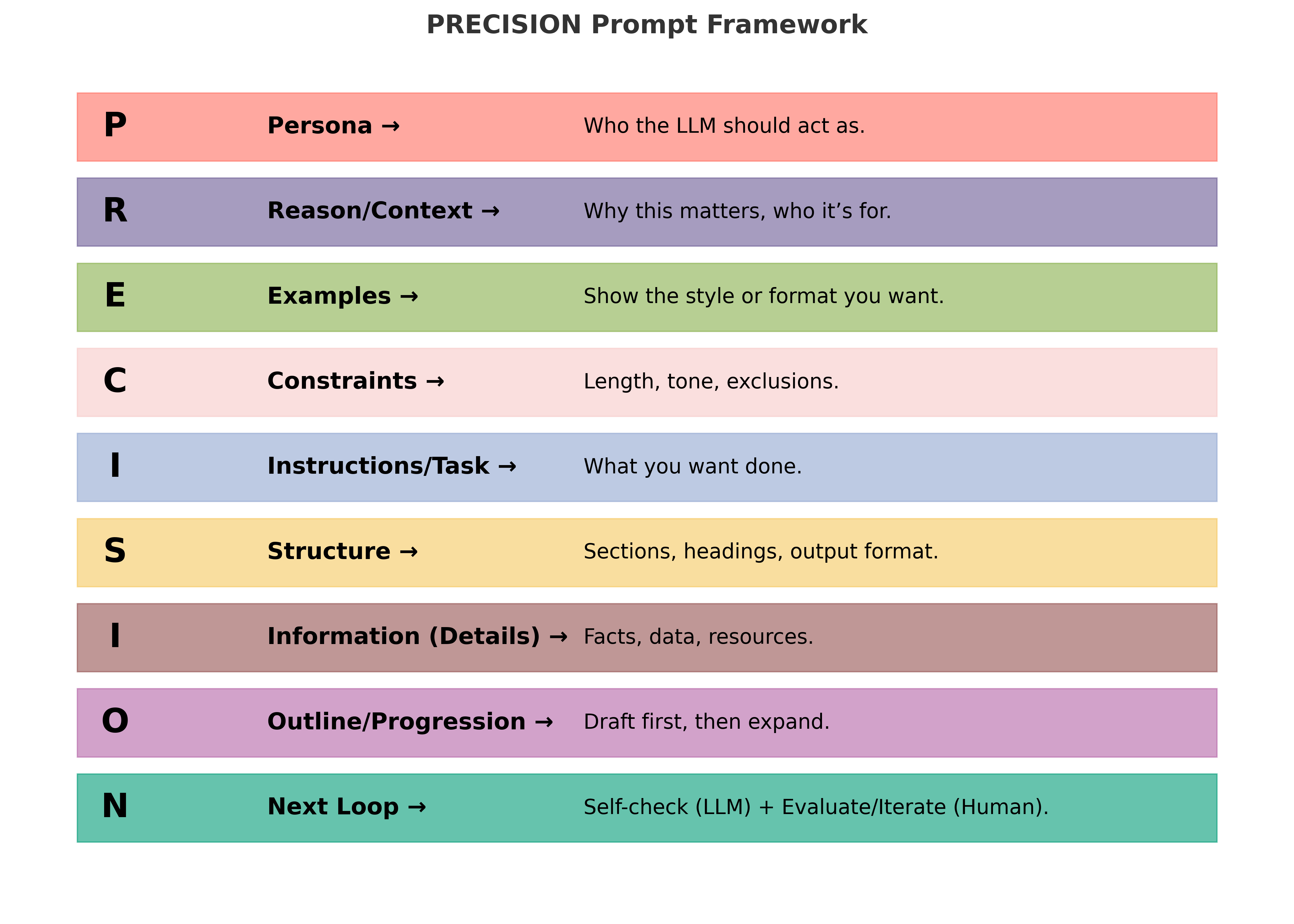

The PRECISION Prompt Framework was created to give practitioners a clear, modular approach to prompt design. Each letter in PRECISION represents a building block of effective prompting, from defining the model's role to setting constraints and shaping the output. Some elements are essential in every prompt, while others are refinements you add when the task is complex or high stakes.

The framework balances clarity, which ensures the model knows exactly what you want, with flexibility, which allows you to adjust how much structure you provide depending on your needs. At its core, PRECISION helps you:

- Provide the right persona and context so the model adopts the right perspective.

- Supply instructions and, when needed, information to ground the response.

- Refine with examples, constraints, structure, and progression to control style and depth.

- Maintain a cycle of iteration, with you, the human, always evaluating and improving the prompt.

By applying PRECISION, you can move beyond trial-and-error prompting into a repeatable practice. It turns prompting from an art into a craft that can be taught, refined, and relied upon.

One of the strengths of the PRECISION framework is its modular design. Its elements fall into four groups: core elements that every prompt needs, a conditional element that depends on the task, optional refinements, and a human practice that always applies.

Core (Required)

These are the non-negotiables. Without them, the LLM won't have enough guidance:

- Persona → Defines who the model should act as. Even a minimal role (e.g., “Act as a helpful assistant”) anchors the voice and perspective.

- Reason/Context → Explains why the task matters and who the audience is. Prevents vague or generic answers.

- Instructions/Task → The heart of the request. Provides clear direction on what the model must do.
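As an illustration, the three core elements can be assembled into a prompt with a few lines of Python. This is a minimal sketch, not part of the framework itself: the function name `build_core_prompt`, the section labels, and the sample text are all assumptions made for the example.

```python
def build_core_prompt(persona: str, context: str, task: str) -> str:
    """Join the three core elements into one prompt, one labeled section each.

    Hypothetical helper: the labels and layout are illustrative choices.
    """
    parts = {"Persona": persona, "Context": context, "Task": task}
    # Refuse to build a prompt if any core element is empty.
    missing = [name for name, text in parts.items() if not text.strip()]
    if missing:
        raise ValueError(f"Missing core element(s): {', '.join(missing)}")
    return "\n\n".join(f"{name}: {text.strip()}" for name, text in parts.items())


prompt = build_core_prompt(
    persona="Act as a helpful assistant.",
    context="The reader is a new team member who has never used an LLM.",
    task="Explain in plain language what a prompt is.",
)
print(prompt)
```

Raising an error on an empty element mirrors the idea that these three parts are non-negotiable; a looser implementation could fall back to a default persona instead.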

Conditional (Depends on the Task)

Use this element when the model's answer must be grounded in your specific situation. Skip it when you're only asking for general knowledge or creative ideas.

- Information (Details) → Facts, data, or references the model should use. Required for context-specific or fact-based tasks; optional for general or creative prompts.

Optional (Refinements)

These sharpen or polish the output. Add them when you need structure, consistency, or extra quality control:

- Examples → Guide style and format (e.g., “format it like this…”).

- Constraints → Set boundaries such as word count, tone, or exclusions.

- Structure → Defines the output shape, such as sections, headings, or lists.

- Outline/Progression → Encourages step-by-step reasoning before the final output.

- Self-check (LLM) → Instructs the model to review its own work. Helpful for extra quality control, but not essential since you will review the output yourself.
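The optional refinements can be layered on in the same spirit: include a section only when it has content. The sketch below assumes a hypothetical `Refinements` container and `render_refinements` function; the field names follow the list above, but the rendering format is an illustrative choice.

```python
from dataclasses import dataclass, field


@dataclass
class Refinements:
    """Optional prompt elements; every field defaults to empty (hypothetical type)."""
    examples: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    structure: str = ""
    progression: str = ""
    self_check: str = ""


def render_refinements(r: Refinements) -> str:
    """Render only the refinements that were actually provided."""
    sections = []
    if r.examples:
        sections.append("Examples:\n" + "\n".join(f"- {e}" for e in r.examples))
    if r.constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in r.constraints))
    if r.structure:
        sections.append(f"Structure: {r.structure}")
    if r.progression:
        sections.append(f"Progression: {r.progression}")
    if r.self_check:
        sections.append(f"Self-check: {r.self_check}")
    return "\n\n".join(sections)


refinements = Refinements(
    constraints=["Maximum 150 words", "Neutral tone"],
    structure="A short heading followed by three bullet points.",
)
text = render_refinements(refinements)
print(text)
```

The rendered text would be appended to the core prompt. Because empty fields are skipped, the same code serves both a lightly refined prompt and one that uses every refinement.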

Always Required (Human Practice)

This step is not part of the prompt itself but is fundamental to good use of LLMs:

- Next Loop (Evaluate & Iterate) → You, as the human, must always evaluate the response and decide whether to refine the prompt. This is where judgment and iteration come in.

Quick Rule of Thumb

- Everyday prompting → Persona + Context + Task

- Fact-based/contextual prompting → Add Information (Details)

- Important deliverables → Add Constraints, Structure, and Examples

- High-stakes or polished outputs → Use the full PRECISION framework, including Outline/Progression and Self-check

- Always → Evaluate and iterate as the human in the loop
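The rule of thumb can also be read as a small lookup from the kind of task to the elements a prompt should include. The following sketch is one possible reading, not a definitive mapping: the tier names (`everyday`, `important`, `high_stakes`) and the function `elements_for` are hypothetical, and it assumes that Information is added whenever the task is fact-based and always for high-stakes work. The human evaluation step is left out because it happens outside the prompt.

```python
CORE = ["Persona", "Context", "Task"]

# Extra elements per tier (tier names are illustrative labels, not framework terms).
EXTRAS = {
    "everyday": [],
    "important": ["Constraints", "Structure", "Examples"],
    "high_stakes": [
        "Examples",
        "Constraints",
        "Structure",
        "Outline/Progression",
        "Self-check",
    ],
}


def elements_for(tier: str, needs_information: bool = False) -> list[str]:
    """Return the prompt elements suggested for a tier.

    Assumption: Information is included for fact-based tasks and for
    every high-stakes prompt ("full framework").
    """
    if tier not in EXTRAS:
        raise ValueError(f"Unknown tier: {tier!r}")
    elements = list(CORE)
    if needs_information or tier == "high_stakes":
        elements.append("Information")
    return elements + EXTRAS[tier]


print(elements_for("everyday"))
print(elements_for("everyday", needs_information=True))
print(elements_for("high_stakes"))
```

Whatever the tier, the output still goes through the same final step: you evaluate the response and decide whether to refine the prompt.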